Many API calls return JSON, and many web apps use it because it moves information around the internet easily. It is easy to read and easy to parse, even with Excel.

JSON represents data as objects (sets of name/value pairs) or arrays (ordered lists of values).
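As a rough illustration of objects and arrays, the sketch below parses a small JSON string with Python's standard `json` module and splits it into name/value pairs. The sample record and the `flatten` helper are hypothetical, made up for this example, and the dotted/bracketed naming of nested fields is just one possible convention.

```python
import json


def flatten(value, prefix=""):
    """Yield (name, value) pairs for every leaf of a parsed JSON document."""
    if isinstance(value, dict):
        # JSON object: recurse into each name/value pair.
        for key, child in value.items():
            path = f"{prefix}.{key}" if prefix else key
            yield from flatten(child, path)
    elif isinstance(value, list):
        # JSON array: recurse into each element, tagging it with its index.
        for index, child in enumerate(value):
            yield from flatten(child, f"{prefix}[{index}]")
    else:
        # Leaf value (string, number, boolean or null).
        yield prefix, value


# Hypothetical sample record, not taken from any real API.
raw = '{"id": 7, "author": {"name": "alice"}, "tags": ["excel", "json"]}'
record = json.loads(raw)
pairs = list(flatten(record))

for name, value in pairs:
    print(name, "=", value)
```

Each pair could then become one row of a two-column table, which is roughly the shape a spreadsheet tool works with after expanding a JSON record.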

If you are not familiar with Excel Power Tools, you can find out about them here. We are also powered by STEEM, so you can earn while you learn. This article contains data tables to download so you can practise along and master the art of parsing custom JSON data using Excel.

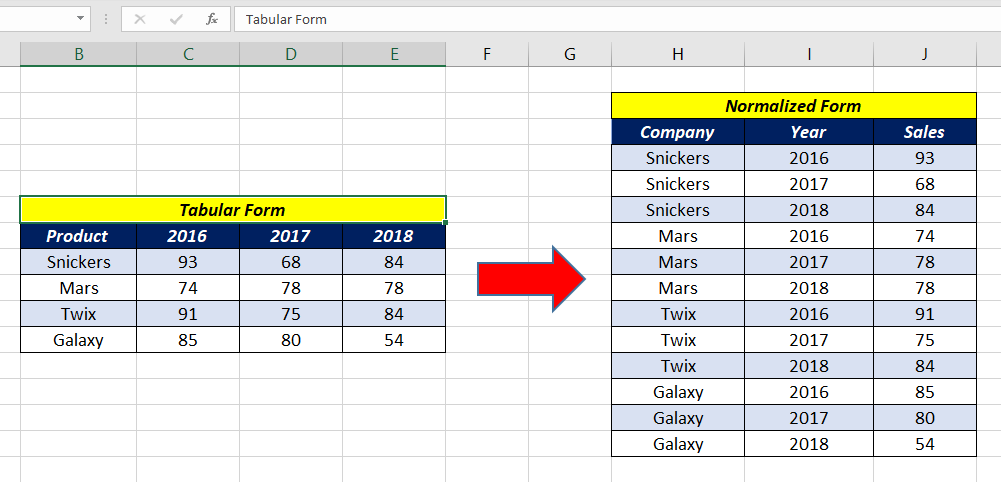

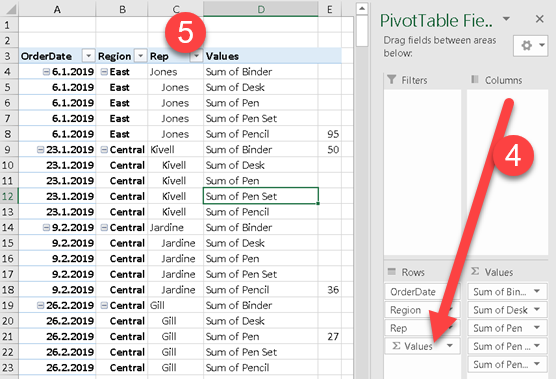

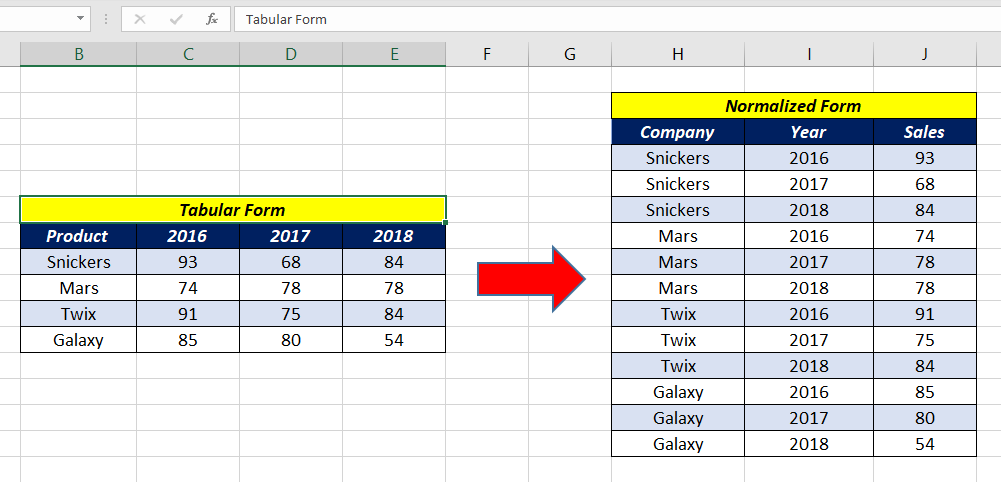

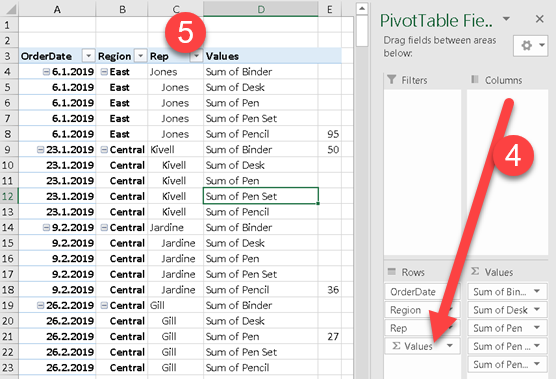

Excel is a powerful tool that lets you connect to JSON data and read it. However, sometimes this data needs a little manipulation before it can be fully understood and analysed in Excel. To parse custom JSON data is to split out its name/value pairs into a more readable, usable format. This covers how to parse simple JSON data using Excel's Power Query, and how to parse complex JSON data using Excel's Power Query.

PySpark SQL provides the `pivot()` function to rotate the data from one column into multiple columns. It is an aggregation in which the values of one of the grouping columns are transposed into individual columns with distinct data. To get the total amount of each product exported to each country, group by Product, pivot by Country, and take the sum of Amount: `pivotDF = df.groupBy("Product").pivot("Country").sum("Amount")`. This transposes the countries from DataFrame rows into columns; wherever data is not present, the value is null by default.

Pivot performance was improved in PySpark 2.0. From version 2.0 onwards pivot is faster; however, if you are using a lower version, note that pivot is a very expensive operation, so it is recommended to provide the column data (if known) as an argument to the function: `pivotDF = df.groupBy("Product").pivot("Country", countries).sum("Amount")`, where `countries` holds the known country values. Another approach is to do two-phase aggregation, starting from `df.groupBy("Product","Country")`. PySpark 2.0 uses this implementation to improve performance (SPARK-13749).

To unpivot the result, define `unpivotExpr = "stack(3, 'Canada', Canada, 'China', China, 'Mexico', Mexico) as (Country,Total)"` and select `"Product"` together with `expr(unpivotExpr)`. The complete code can be downloaded from GitHub.

We have seen how to pivot a DataFrame with a PySpark example and unpivot it back using SQL functions, and also how the PySpark 2.0 changes improved performance by doing two-phase aggregation.
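To make the pivot idea concrete without a Spark cluster, here is a minimal plain-Python sketch of "group by Product, pivot by Country, sum of Amount", followed by a `stack`-style unpivot. It is not the PySpark implementation; the sample rows and the `pivot` and `unpivot` helpers are assumptions invented for illustration, and dropping empty cells during the unpivot is a choice made in this sketch.

```python
from collections import defaultdict

# Hypothetical sample rows: (Product, Amount, Country).
rows = [
    ("Banana", 1000, "Canada"),
    ("Banana", 400, "China"),
    ("Carrots", 1500, "Canada"),
    ("Carrots", 1200, "China"),
    ("Banana", 2000, "Mexico"),
    ("Banana", 600, "Canada"),
]

# Supplying the pivot column values up front mirrors the advice to pass
# known column data as an argument. Every row's country must be listed here.
countries = ["Canada", "China", "Mexico"]


def pivot(rows, countries):
    """Sum Amount per Product, with one column per country (None = no data)."""
    totals = defaultdict(lambda: dict.fromkeys(countries))
    for product, amount, country in rows:
        current = totals[product][country]
        totals[product][country] = amount if current is None else current + amount
    return dict(totals)


def unpivot(pivoted, countries):
    """Turn country columns back into (Product, Country, Total) rows.

    Cells with no data are skipped in this sketch.
    """
    return [
        (product, country, columns[country])
        for product, columns in pivoted.items()
        for country in countries
        if columns[country] is not None
    ]


pivoted = pivot(rows, countries)
unpivoted = unpivot(pivoted, countries)

for product, columns in pivoted.items():
    print(product, columns)
for row in unpivoted:
    print(row)
```

Note that the unpivoted rows hold totals per product and country, not the original input rows: the aggregation step cannot be undone.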
